"Carving Out" an Expert from Your B+ LLM🦄🤓

Remember the news article[1] reporting that ChatGPT achieved a bar exam score comparable to that of a junior lawyer or legal assistant?

In an experiment conducted by two law professors and two staff members of the legal tech company Casetext, GPT-4 scored 297 on the bar exam, which, according to the researchers, places it in the 90th percentile of human test takers, a good enough result to be admitted to practice law in most US states.

If ChatGPT proves adept at handling legal matters, would using it be like hiring a "good enough" legal counselor?

Assuming this is true, you might wonder whether the training data of such a vast language model also contains top-tier legal expertise.

Wouldn't it be advantageous to obtain an expert "sub-model" that is more knowledgeable, more specialized, and less "average" to assist us further?

What is Self-Specialization?

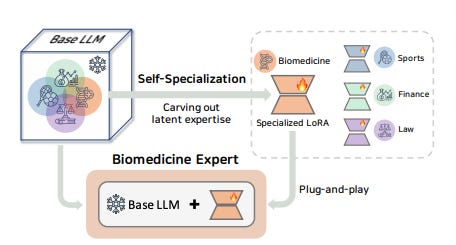

Self-specialization refers to the process of uncovering and developing latent expertise within a pre-trained LLM to make it more effective in a specific domain, such as biomedicine.

In contrast to general alignment, self-specialization leverages domain-specific unlabeled data and a few labeled seeds for the self-alignment process, thereby "carving out" an expert model from a "generalist" pre-trained LLM. Its authors report that this approach markedly improves zero-shot and few-shot performance in the target domains, offering an efficient way to tailor an LLM to a specific area of expertise.

How to do it?

Traditionally, to train a domain-expert LLM, you’ll need a set of well-curated, human-labeled data, ideally in large quantities.

This process often involves obtaining data from subject matter experts, such as legal cases, expert surveys or interviews, medical case studies and reports. However, this traditional approach can be expensive and time-consuming.

Well, innovative researchers came up with a brilliant solution: self-alignment.

By letting LLMs automatically generate instruction data from a handful of human-authored seeds, self-alignment harnesses the general knowledge these models already hold (acquired through extensive pre-training on internet corpora) without requiring extensive human annotation.
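To make the self-alignment idea concrete, here is a minimal Python sketch of a Self-Instruct-style bootstrapping loop: sample a few instructions from the pool as in-context examples, ask the model for a new one, and keep it only if it is not too similar to anything already collected. The `generate` callable is a hypothetical stand-in for a call to the base LLM, and the ROUGE-L novelty filter with a 0.7 cutoff is an assumption borrowed from the Self-Instruct recipe, not something this post specifies.

```python
import random
from typing import Callable, List


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for tok_a in a:
        curr = [0]
        for j, tok_b in enumerate(b):
            if tok_a == tok_b:
                curr.append(prev[j] + 1)
            else:
                curr.append(max(prev[j + 1], curr[j]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """Whitespace-token ROUGE-L F1 between two strings (no stemming)."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    lcs = lcs_length(cand, ref) if cand and ref else 0
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(cand), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


def bootstrap_instructions(
    seeds: List[str],
    generate: Callable[[List[str]], str],  # hypothetical LLM call
    target_size: int,
    max_attempts: int = 100,
    threshold: float = 0.7,  # assumed Self-Instruct-style novelty cutoff
    shots: int = 3,
    rng_seed: int = 0,
) -> List[str]:
    """Grow an instruction pool from a few human-written seeds."""
    pool = list(seeds)
    rng = random.Random(rng_seed)
    for _ in range(max_attempts):
        if len(pool) >= target_size:
            break
        # Sample in-context examples from everything collected so far.
        examples = rng.sample(pool, k=min(shots, len(pool)))
        candidate = generate(examples).strip()
        # Keep the candidate only if it is sufficiently different from the pool.
        if candidate and all(rouge_l_f1(candidate, old) < threshold for old in pool):
            pool.append(candidate)
    return pool


# Usage with a canned "model" so the example runs offline.
canned = iter([
    "Summarize the holding of the attached court opinion.",
    "Summarize the holding of the attached court opinion.",  # duplicate, rejected
    "List the elements required to prove negligence.",
])
seeds = ["Explain the doctrine of res judicata.", "Draft a short cease-and-desist letter."]
pool = bootstrap_instructions(seeds, lambda examples: next(canned, ""), target_size=4, max_attempts=5)
print(pool)
```

In a real run, `generate` would format the sampled examples into a prompt and call the model; the similarity filter is what keeps the pool from filling up with near-duplicates.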

Drawing inspiration from the foundational principles of self-alignment, self-specialization goes a step further by incorporating domain-specific seeds and external knowledge.

This approach integrates specialized seed instructions and augments them with a retrieval component. Its goal is to guide models beyond generic alignment, directing them to generate data that is not only contextually appropriate for the specialized domain but also highly accurate.

To give an overview, the process begins with a small set of human-authored, domain-specific seed instructions. The base model uses these seeds as examples to generate synthetic instructions and corresponding input contexts tailored to the target domain.
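As an illustration of the instruction-generation step, here is a minimal Python sketch of how domain seeds might be packed into a few-shot prompt and how the model's completion could be parsed back into an instruction and its input. The seed tasks, the prompt wording, and the `<noinput>` placeholder (a convention from Self-Instruct-style data) are all assumptions for illustration, not the exact format used by the self-specialization authors.

```python
import random
from typing import Dict, List, Optional

# Hypothetical domain seeds; a real run would use a small set of expert-written tasks.
SEEDS: List[Dict[str, str]] = [
    {"instruction": "Identify the gene mentioned in the sentence.",
     "input": "Mutations in BRCA1 increase the risk of breast cancer."},
    {"instruction": "Explain what an ACE inhibitor does in one sentence.", "input": ""},
    {"instruction": "Classify the relation between the drug and the disease.",
     "input": "Metformin is prescribed for type 2 diabetes."},
    {"instruction": "List common side effects of the given drug.", "input": "Ibuprofen"},
]


def build_instruction_prompt(seeds, domain="biomedical", num_shots=3, rng_seed=0) -> str:
    """Few-shot prompt asking the base model to write one new domain task."""
    rng = random.Random(rng_seed)
    shots = rng.sample(seeds, k=min(num_shots, len(seeds)))
    lines = [f"Come up with a new, diverse task in the {domain} domain.", ""]
    for i, seed in enumerate(shots, start=1):
        lines += [f"Task {i}",
                  f"Instruction: {seed['instruction']}",
                  f"Input: {seed['input'] or '<noinput>'}",
                  ""]
    # Leave the last task open so the model completes it.
    lines += [f"Task {len(shots) + 1}", "Instruction:"]
    return "\n".join(lines)


def parse_generated_task(completion: str) -> Optional[Dict[str, str]]:
    """Split a completion into an instruction and its input context."""
    # Keep only the first task in case the model keeps writing "Task N+1".
    first_task = completion.split("\nTask ")[0]
    instruction, sep, context = first_task.partition("Input:")
    instruction = instruction.strip()
    if not sep or not instruction:
        return None  # malformed output is simply discarded
    context = context.strip()
    return {"instruction": instruction, "input": "" if context == "<noinput>" else context}


prompt = build_instruction_prompt(SEEDS)
print(prompt)
task = parse_generated_task(
    " Name the organ primarily affected by hepatitis.\nInput: <noinput>\n\nTask 5\nInstruction: ..."
)
print(task)
```

Ending the prompt with an open "Instruction:" line lets a plain completion model continue the pattern without any chat-specific formatting.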

In the response generation phase, a response is generated for each synthetic instruction-input pair. This step is enriched with domain-specific knowledge obtained through a retrieval component.
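Here is one way the retrieval-augmented response step could look, as a minimal Python sketch. BM25 is an assumed stand-in for the retrieval component (the post does not say which retriever is used), the three-sentence corpus is hypothetical, and the prompt wording is an assumption. The resulting prompt would then be sent to the base model to produce the response.

```python
import math
from collections import Counter
from typing import List

PUNCT = ".,;:()?!\"'"


def tokenize(text: str) -> List[str]:
    """Lowercase whitespace tokens with surrounding punctuation stripped."""
    tokens = (t.strip(PUNCT).lower() for t in text.split())
    return [t for t in tokens if t]


class BM25:
    """Small in-memory Okapi BM25 retriever over a list of passages."""

    def __init__(self, docs: List[str], k1: float = 1.5, b: float = 0.75):
        if not docs:
            raise ValueError("BM25 needs at least one document")
        self.docs, self.k1, self.b = docs, k1, b
        tokenized = [tokenize(d) for d in docs]
        self.doc_lens = [len(t) for t in tokenized]
        self.avgdl = max(sum(self.doc_lens) / len(docs), 1e-9)
        self.term_freqs = [Counter(t) for t in tokenized]
        df = Counter(term for t in tokenized for term in set(t))
        n = len(docs)
        # "+1" inside the log keeps every idf positive.
        self.idf = {term: math.log(1 + (n - f + 0.5) / (f + 0.5)) for term, f in df.items()}

    def score(self, query: str, index: int) -> float:
        tf = self.term_freqs[index]
        norm = self.k1 * (1 - self.b + self.b * self.doc_lens[index] / self.avgdl)
        total = 0.0
        for term in tokenize(query):
            f = tf.get(term, 0)
            if f:
                total += self.idf[term] * f * (self.k1 + 1) / (f + norm)
        return total

    def top_k(self, query: str, k: int = 2) -> List[str]:
        ranked = sorted(range(len(self.docs)), key=lambda i: self.score(query, i), reverse=True)
        return [self.docs[i] for i in ranked[:k]]


def build_response_prompt(instruction: str, task_input: str, retriever: BM25, k: int = 2) -> str:
    """Prepend retrieved domain passages to a synthetic instruction-input pair."""
    passages = retriever.top_k(f"{instruction} {task_input}", k=k)
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Use the reference passages below if they are relevant.\n"
        f"{context}\n\n"
        f"Instruction: {instruction}\n"
        f"Input: {task_input or '<noinput>'}\n"
        "Response:"
    )


# Hypothetical stand-in for an unlabeled domain corpus.
CORPUS = [
    "Metformin is a first-line medication for type 2 diabetes.",
    "BRCA1 is a tumor suppressor gene linked to breast cancer.",
    "Aspirin irreversibly inhibits cyclooxygenase enzymes.",
]
retriever = BM25(CORPUS)
prompt = build_response_prompt("Name the gene linked to breast cancer.", "", retriever, k=1)
print(prompt)
```

BM25 is a convenient default here because it needs no training; a dense retriever could be swapped in behind the same `top_k` interface.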

The process culminates in the specialization phase, where the base model is fine-tuned with QLoRA[2] on the generated data to refine its expertise in the target domain. Conceptually, this process can be described as “unveiling latent expertise within Large Language Models (LLMs)”.
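For intuition on why QLoRA makes this tuning step cheap, here is the standard LoRA update it builds on: the pre-trained weight stays frozen and only a low-rank correction is learned. The dimensions in the worked count (d = k = 4096, r = 16) are illustrative assumptions, not settings reported in the source.

```latex
h = W_0 x + \Delta W x = W_0 x + \frac{\alpha}{r} B A x,
\qquad W_0 \in \mathbb{R}^{d \times k},\;
B \in \mathbb{R}^{d \times r},\;
A \in \mathbb{R}^{r \times k},\;
r \ll \min(d, k)

\text{trainable parameters per adapted matrix} = r(d + k)
= 16 \cdot (4096 + 4096) = 131{,}072
\quad \text{vs.} \quad dk = 4096^2 = 16{,}777{,}216 \;(\approx 0.78\%)
```

B is initialized to zero, so tuning starts exactly from the base model. QLoRA additionally stores the frozen W_0 in 4-bit NormalFloat (NF4) precision and trains only A and B in higher precision, which shrinks the memory needed for the base weights as well.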

Performance
