What is Static Embedding and Why Should You Care?

Transformer-based models have been the go-to methods in AI ever since their introduction a few years ago. If you recall the earlier days of pre-transformer embedding models like Word2Vec, GloVe, and ELMo, you'll remember how transformative the arrival of the attention mechanism was. This innovation has since become the foundation of most state-of-the-art AI architectures.

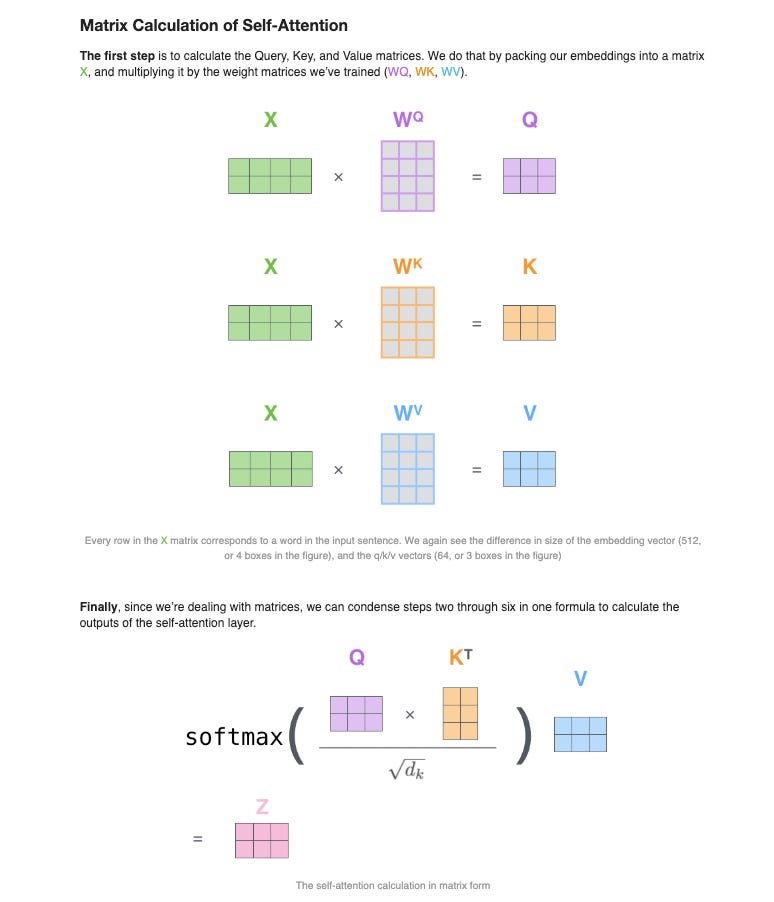

Attention allows each token to have a context-aware vector representation, defined not only by the token itself but also by its surrounding tokens. The classic example: a transformer-based model represents the word "bank" differently in "river bank" than in a sentence about a bank as a financial institution.

That’s how they provide “contextual embeddings”.

However, static embeddings are making an unexpected comeback. The newer static models offer remarkable speed improvements with surprisingly small quality trade-offs, making them a compelling option for many applications.

In this post, we'll explore what static embeddings are, why they're gaining renewed attention, and why you should care about this development.

Understanding Static Embeddings

How do the two differ?

Transformer-based models, which have become the go-to solution for many NLP tasks, use attention mechanisms to capture relationships between input tokens. However, as the authors of Model2Vec put it:

While these models have shown state-of-the-art performance on a large number of tasks they also come with heavy resource requirements: large energy consumption, computational demands, and longer processing times. Although there are many ways in which you can make existing (Sentence) Transformers faster, e.g. quantization, or specialized kernels, they are still relatively slow, especially on CPU.

So you might ask:

What if we need to go faster and are working on a time-constrained product (e.g. a search engine), or have very little resources available?

This is where static embeddings come in.

Static embeddings, unlike context-aware embeddings, are fixed representations of words or tokens: each one is assigned a single vector regardless of context. This means that a word like "bank" will have the same embedding whether it refers to a financial institution or the side of a river. These embeddings are typically produced by models trained on large text corpora (e.g. GloVe and Word2Vec) and capture general semantic relationships between words. However, they cannot disambiguate words with multiple meanings based on context.
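To make the contrast concrete, here is a minimal sketch of a static lookup in Python. The three-dimensional vectors and the `STATIC_TABLE` and `embed_tokens` names are made up for illustration; real tables such as GloVe's hold far more dimensions per word.

```python
# Toy static embedding table: one fixed vector per word.
# The values are invented for illustration, not taken from any real model.
STATIC_TABLE = {
    "river": [0.9, 0.1, 0.0],
    "bank": [0.5, 0.5, 0.2],
    "deposit": [0.1, 0.9, 0.3],
    "money": [0.0, 0.8, 0.4],
}


def embed_tokens(sentence):
    """Look up each known word; the surrounding words are never consulted."""
    words = sentence.lower().split()
    return {word: STATIC_TABLE[word] for word in words if word in STATIC_TABLE}


river_sense = embed_tokens("river bank")
money_sense = embed_tokens("deposit money bank")

# The same vector comes back for "bank" in both sentences.
print(river_sense["bank"] == money_sense["bank"])  # True
```

A contextual model would instead compute the vector for "bank" from the whole sentence, so the two results would differ.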

How Can Static Embeddings Help?

The renewed interest in static embeddings was sparked by MinishLab's introduction of the Model2Vec technique in October 2024. This approach achieved “a remarkable 15x reduction in model size and up to 500x speed increase” while maintaining impressive performance levels.

Model2Vec is a technique designed to create a compact, efficient static embedding model distilled from a larger sentence transformer. It involves processing a vocabulary through a sentence transformer to obtain token embeddings, reducing their dimensionality using Principal Component Analysis (PCA), and applying Zipf weighting to adjust for token frequency. During inference, it computes sentence representations by averaging the embeddings of constituent tokens. Although this approach results in uncontextualized embeddings, it achieves a balance between performance and computational efficiency, making it suitable for applications where resources are limited.
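The weighting and averaging steps described above can be sketched in a few lines of plain Python. This is a sketch under assumptions, not Model2Vec's actual code: the token vectors are invented, the PCA step is left out, tokenization is a simple whitespace split, and the `log(1 + rank)` weight is one plausible form of Zipf weighting that assumes the vocabulary is sorted from most to least frequent.

```python
import math

# Hypothetical token vectors, as if already produced by a sentence transformer
# and reduced with PCA. The vocabulary is assumed to be sorted by frequency
# (rank 1 = most common token).
VOCAB = ["the", "bank", "river", "loan"]
RAW_VECTORS = {
    "the": [0.2, 0.2],
    "bank": [0.6, 0.4],
    "river": [0.9, 0.1],
    "loan": [0.1, 0.9],
}


def zipf_weight(rank):
    """Assumed weighting: frequent tokens (low rank) get a smaller weight."""
    return math.log(1 + rank)


# Apply the weight once, ahead of time, so inference is only lookup and averaging.
WEIGHTED = {
    token: [zipf_weight(rank) * x for x in RAW_VECTORS[token]]
    for rank, token in enumerate(VOCAB, start=1)
}


def embed_sentence(sentence):
    """Mean-pool the weighted vectors of all known tokens in the sentence."""
    vectors = [WEIGHTED[t] for t in sentence.lower().split() if t in WEIGHTED]
    if not vectors:
        return [0.0] * len(RAW_VECTORS[VOCAB[0]])
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def cosine(a, b):
    """Cosine similarity between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


river_bank = embed_sentence("the river bank")
bank_loan = embed_sentence("the bank loan")
print(cosine(river_bank, bank_loan))
```

Because the weights are baked into the table up front, embedding a sentence in this sketch needs no attention layers or matrix multiplications, only lookups and an average. That is where the speed of the static approach comes from.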

Benefits of Static Embeddings

The resurgence of static embeddings brings several compelling benefits:

Speed: Static embeddings can be significantly faster than transformer-based models, especially during the encoding process. In some cases, they can be up to 400x faster on a CPU compared to state-of-the-art embedding models.

Efficiency: Static embeddings require less computational power and memory, making them suitable for resource-constrained environments.

Model Size: Static embedding models are much smaller than their transformer-based counterparts, reducing storage requirements and enabling deployment in environments with limited capacity.

Comparable Quality: While there is a trade-off in performance, static embeddings can achieve surprisingly good results, sometimes still reaching 85% of transformer-level quality.

Static Embeddings in Practice
