What Is MCP? Plus Some Illustrated Examples

Imagine having your own personal AI assistant that could effortlessly manage your emails, schedule meetings, analyze data, and even code for you. We're closer than ever to making this a reality, thanks to the rapid advancements in large language models (LLMs) like GPT-4. But there's a catch – these AI marvels are still frustratingly limited in what they can actually do.

A concept that recently went viral, MCP, could be the unsung hero that changes what AI assistants can actually do. But what exactly is MCP, and why should you care?

What the Heck is MCP Anyway?

MCP, or Model Context Protocol, is the latest buzzword taking the AI world by storm. But don't let the fancy name fool you – at its core, MCP is a brilliantly simple solution to a complex problem.

Professor Ross Mike explains it best:

"Understanding MCP is really important, but you'll also realize the benefits and why it's sort of a big deal, but not really at the same time."

In essence, MCP is a new standard that lets LLM-based assistants (like ChatGPT) connect to external tools and services through one common interface. Think of it as a universal translator that enables your AI assistant to speak fluently with databases, APIs, and a whole host of other digital services.

Current LLMs Are Like Brilliant Scholars Trapped in a Library

To truly appreciate the significance of MCP, we need to understand the limitations of current LLMs. As Professor Mike points out:

"LLMs by themselves are incapable of doing anything meaningful. What do I mean by that? If you remember the first ChatGPT 3 or 3.5, if you just open any chatbot and tell it to send you an email, it won't know how to do that."

Imagine the smartest person you know, locked in a vast library with all the world's knowledge. They can answer questions and discuss any topic, but they can't actually do anything beyond the confines of that library. That's essentially what we're dealing with when it comes to current LLMs.

We Need to Move From Basic LLMs to Tool-Connected Assistants

The next step in AI assistant evolution was connecting LLMs to external tools. This allowed them to perform more practical tasks, like searching the internet or updating spreadsheets. However, this approach came with its own set of headaches.

Professor Mike illustrates the problem:

"You start to become someone who glues a bunch of different tools to these LLMs, and it can get very frustrating, very cumbersome. If you're wondering why we don't have an Iron Man level Jarvis assistant, it's because combining these tools, making it work with the LLM is one thing, but then stacking these tools on top of each other, making it cohesive, making it work together is a nightmare itself."

MCP Lets All Your Agentic Tools Speak the Same Language

This is where MCP comes in to save the day. Instead of dealing with a mishmash of different tools and APIs, each speaking its own "language," MCP acts as a universal translator.
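The universal-translator idea can be sketched in a few lines of Python. This is a toy illustration of the concept, not MCP itself: the three tools, the `ToolLayer` class, and its `register`, `list_tools`, and `call` methods are all hypothetical names made up for the example.

```python
from typing import Any, Callable, Dict, List

# Three hypothetical tools, each with its own calling convention ("language").
def send_email(to: str, subject: str, body: str) -> str:
    return f"email to {to}: {subject}"

def search_web(q: str, max_results: int = 3) -> List[str]:
    return [f"result {i} for {q}" for i in range(1, max_results + 1)]

class Spreadsheet:
    def __init__(self) -> None:
        self.cells: Dict[str, Any] = {}

    def set_cell(self, ref: str, value: Any) -> bool:
        self.cells[ref] = value
        return True

class ToolLayer:
    """Uniform layer: every tool is reached as a name plus a dict of arguments."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[[Dict[str, Any]], Any]] = {}

    def register(self, name: str, handler: Callable[[Dict[str, Any]], Any]) -> None:
        self._tools[name] = handler

    def list_tools(self) -> List[str]:
        return sorted(self._tools)

    def call(self, name: str, arguments: Dict[str, Any]) -> Any:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name](arguments)

# Each small adapter translates the uniform call into the tool's own signature.
sheet = Spreadsheet()
layer = ToolLayer()
layer.register("send_email", lambda a: send_email(a["to"], a["subject"], a["body"]))
layer.register("search_web", lambda a: search_web(a["query"], a.get("max_results", 3)))
layer.register("update_cell", lambda a: sheet.set_cell(a["ref"], a["value"]))

print(layer.list_tools())
print(layer.call("search_web", {"query": "mcp", "max_results": 2}))
```

The model only ever needs to learn one shape of call, `call(name, arguments)`, while the per-tool quirks stay inside the adapters.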

Professor Mike breaks it down with a brilliant analogy:

"Think of every tool that I have to connect to to make my LLM valuable as a different language. Tool one's English, tool two is Spanish, tool three is Japanese, right? MCP you can consider it to be a layer between your LLM and the services and the tools, and this layer translates all those different languages into a unified language that makes complete sense to the LLM."

A Peek Under the Hood of How MCP Is Structured

To truly grasp the power of MCP, it's helpful to understand its basic structure. The MCP ecosystem consists of four main components:

MCP Client: The LLM-facing side. It lives in the application the user talks to and sends requests on the model's behalf.

MCP Protocol: The standardized communication method between client and server.

MCP Server: Translates the capabilities of external services for the client.

External Service: The actual tool or API being accessed.
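To make the four components concrete, here is a toy, in-process sketch in Python. It is not the official SDK and skips real concerns such as transport and initialization. The MCP specification is built on JSON-RPC 2.0 and uses method names such as `tools/list` and `tools/call`, which the sketch borrows; `ToyClient`, `ToyServer`, `weather_service`, and the simplified result shapes are assumptions made for illustration.

```python
import json
from typing import Any, Dict, Optional

# External service: a hypothetical weather lookup standing in for a real API.
def weather_service(city: str) -> Dict[str, Any]:
    data = {"Paris": 18, "Cairo": 31}
    return {"city": city, "temp_c": data.get(city)}

class ToyServer:
    """Server: advertises capabilities and translates requests into service calls."""

    def handle(self, raw: str) -> str:
        req = json.loads(raw)
        params = req.get("params", {})
        if req["method"] == "tools/list":
            result: Dict[str, Any] = {
                "tools": [{"name": "get_weather", "description": "Temperature by city"}]
            }
        elif req["method"] == "tools/call" and params.get("name") == "get_weather":
            result = weather_service(**params["arguments"])
        else:
            # -32601 is the JSON-RPC 2.0 "Method not found" error code.
            error = {"code": -32601, "message": "Method not found"}
            return json.dumps({"jsonrpc": "2.0", "id": req["id"], "error": error})
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})

class ToyClient:
    """Client: packages requests from the LLM side into protocol messages."""

    def __init__(self, server: ToyServer) -> None:
        self.server = server
        self._next_id = 0

    def request(self, method: str, params: Optional[Dict[str, Any]] = None) -> Any:
        self._next_id += 1
        # Protocol: the JSON text below is the only thing client and server share.
        message = {"jsonrpc": "2.0", "id": self._next_id,
                   "method": method, "params": params or {}}
        reply = json.loads(self.server.handle(json.dumps(message)))
        if "error" in reply:
            raise RuntimeError(reply["error"]["message"])
        return reply["result"]

client = ToyClient(ToyServer())
print(client.request("tools/list"))
print(client.request("tools/call", {"name": "get_weather", "arguments": {"city": "Paris"}}))
```

Because the client only sees JSON messages, the weather lookup could be swapped for any other service without changing a line on the client side.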

What Do MCPs Look Like?

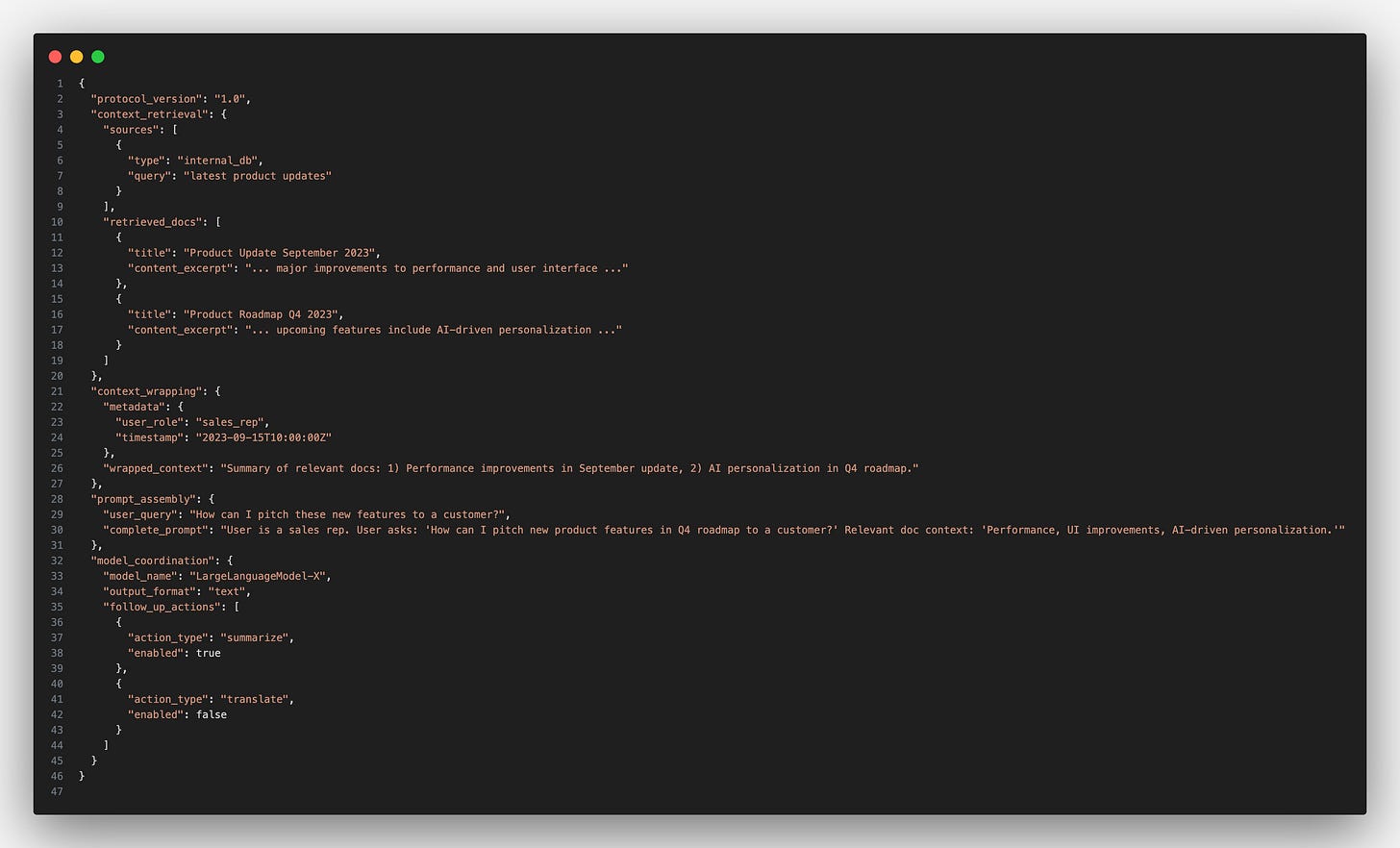

1) A Simple JSON-Based MCP Payload

Imagine you have a user querying a corporate knowledge base for recent product updates. MCP could define a JSON structure for how this conversation flow is packaged:
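A minimal sketch of what such a payload might look like, built as a Python dict and serialized to JSON. The top-level field names are the ones walked through in the explanation that follows; every nested key and value (the source name, the summary text, the model name, the response format) is a placeholder assumed for illustration, and the layout should not be read as the wire format of the published MCP specification.

```python
import json

# Hypothetical payload: top-level keys follow the article's field names,
# all nested keys and values are illustrative placeholders.
payload = {
    "protocol_version": "1.0",
    "context_retrieval": {
        "query": "recent product updates",
        "sources": [{"type": "knowledge_base", "name": "product_docs"}],
    },
    "context_wrapping": {
        "strategy": "summarize",
        "wrapped_context": "Summary of the retrieved product-update documents.",
    },
    "prompt_assembly": {
        "user_question": "What changed in our products recently?",
        "template": "Context:\n{wrapped_context}\n\nQuestion: {user_question}",
    },
    "model_coordination": {
        "model": "example-model",
        "response_format": "markdown",
    },
}

# Assemble the final prompt from the wrapped context and the user's question.
assembly = payload["prompt_assembly"]
prompt = assembly["template"].format(
    wrapped_context=payload["context_wrapping"]["wrapped_context"],
    user_question=assembly["user_question"],
)

print(json.dumps(payload, indent=2))
print(prompt)
```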

Explanation:

The protocol_version allows for backward compatibility.

context_retrieval details the sources for relevant documents.

context_wrapping compresses or summarizes those documents under wrapped_context.

prompt_assembly merges the user’s question with the curated context.

model_coordination tells the system which model to call and what format we want back.

2) A Multi-Step Chat Example

Consider an interactive chatbot that helps users analyze legal documents. MCP can define how each turn of the conversation is handled:
