Unlocking the Full Potential of LLMs with USER-LLM: Efficient Contextualization through User Embeddings

Large language models (LLMs) have revolutionized the field of natural language processing (NLP), offering unprecedented capabilities in understanding and generating human-like text.

However, as these models continue to grow in scale and complexity, effectively leveraging them for personalized user experiences has become a significant challenge.

User Embedding? USER-LLM?

User embeddings are not new. But let’s take a look at “USER-LLM”, a framework developed by a research team at Google that aims to bridge the gap between the power of LLMs and the nuances of user behavior.

USER-LLM introduces a unique approach to contextualizing LLMs with user embeddings, unlocking new possibilities for personalized and efficient language-based applications.

The diagram below contrasts text-prompt-based QA with USER-LLM-based QA:

Why USER-LLM?

The key to USER-LLM's success lies in its ability to address the inherent complexities and limitations of using raw user interaction data with LLMs.

Traditionally, fine-tuning LLMs directly on user interaction data, such as browsing histories or engagement logs, has been a straightforward approach. However, this data is often complex, spanning multiple user journeys, various interaction types, and potential noise or inconsistencies. This complexity can hinder an LLM's ability to identify and focus on the most relevant patterns, ultimately limiting its effectiveness in understanding and adapting to user behavior.

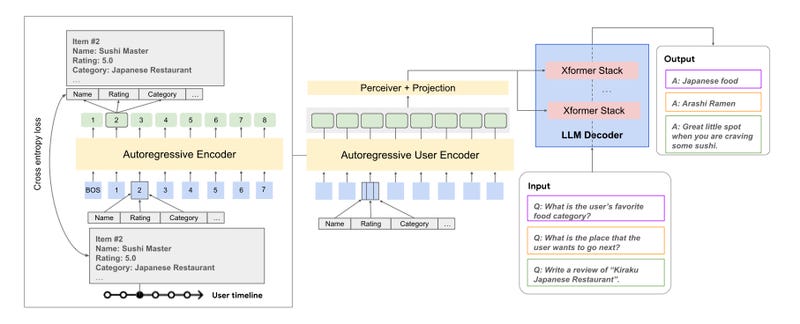

To overcome these challenges, USER-LLM employs a two-stage approach.

First, it uses a Transformer-based encoder to create user embeddings from multimodal, ID-based features extracted from user interactions. These embeddings capture the essence of a user's behavioral patterns and preferences, distilling the complex and noisy data into a compact, informative representation.
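To make the first stage concrete, here is a minimal sketch in plain Python. It is not the USER-LLM implementation: the feature names (`item_id`, `genre_id`, `rating_id`), the embedding width, fusing features by concatenation, and the single attention layer with no learned projections are all assumptions chosen for illustration. A real encoder would be a trained Transformer in a deep learning framework.

```python
import math
import random

random.seed(0)
DIM = 4  # hypothetical embedding width per feature


def make_table(vocab_size, dim=DIM):
    """Randomly initialised lookup table: one vector per ID (untrained)."""
    return [[random.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(vocab_size)]


# Hypothetical ID-based features describing one movie-watching event.
TABLES = {
    "item_id": make_table(100),
    "genre_id": make_table(10),
    "rating_id": make_table(6),
}


def fuse_event(event):
    """Concatenate the per-feature embeddings of one interaction (assumed fusion)."""
    fused = []
    for name, table in TABLES.items():
        fused.extend(table[event[name]])
    return fused


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(seq):
    """Single-head self-attention with identity projections (no learned weights)."""
    scale = math.sqrt(len(seq[0]))
    out = []
    for q in seq:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in seq]
        weights = softmax(scores)
        out.append([sum(w * v[d] for w, v in zip(weights, seq)) for d in range(len(q))])
    return out


def encode_user(history):
    """Map a list of interaction events to one embedding per event."""
    return self_attention([fuse_event(e) for e in history])


history = [
    {"item_id": 12, "genre_id": 3, "rating_id": 5},
    {"item_id": 47, "genre_id": 3, "rating_id": 4},
    {"item_id": 8, "genre_id": 7, "rating_id": 2},
]
user_embeddings = encode_user(history)
print(len(user_embeddings), len(user_embeddings[0]))  # 3 12
```

Each of the three events ends up as one 12-dimensional vector (three features times four dimensions), so the history is represented by a handful of dense vectors instead of a long text description.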

In the second stage, USER-LLM seamlessly integrates these user embeddings with the LLM using cross-attention mechanisms. By cross-attending the user embeddings with the intermediate text representations within the LLM, the model is able to dynamically adapt its understanding and generation to the specific user context. This approach empowers the LLM with a deeper comprehension of users' historical patterns and latent intent, enabling it to tailor responses and generate personalized outcomes.
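The cross-attention step described above can be sketched as follows. This is an illustrative toy in plain Python, not the paper's code: it assumes the text hidden states and the user embeddings already share the same width (in practice a projection layer would align them), it uses no learned query, key, or value weights, and the function name `cross_attend` is hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_attend(text_states, user_embeddings):
    """Each text position queries the user embeddings.

    The attended user context is added back to the text state (residual add).
    """
    scale = math.sqrt(len(user_embeddings[0]))
    updated = []
    for h in text_states:
        # Queries come from the text side, keys and values from the user side.
        weights = softmax([dot(h, u) / scale for u in user_embeddings])
        context = [
            sum(w * u[d] for w, u in zip(weights, user_embeddings))
            for d in range(len(h))
        ]
        updated.append([hd + cd for hd, cd in zip(h, context)])
    return updated


# Toy inputs: two text positions, three user embeddings, width 2.
text_states = [[1.0, 0.0], [0.0, 1.0]]
user_embeddings = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
out = cross_attend(text_states, user_embeddings)
print(out)
```

The point of the design is that the text sequence keeps its own length: the user history enters only through the keys and values, so it does not consume positions in the LLM's input context.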

Performance

USER-LLM has been evaluated on several public datasets against non-LLM baselines such as a dual encoder and BERT4Rec, on tasks including next-item prediction, favorite-category prediction, and review generation. The results show notable improvements over these state-of-the-art, task-specific baselines.

One particularly notable finding is that USER-LLM outperforms traditional text-prompt-based LLM fine-tuning as the input sequence length increases (see the example below for the next-movie prediction task). While the text-prompt approach struggles with longer sequences due to the increased data diversity and potential noise, USER-LLM consistently maintains its performance advantage. This gap can be attributed to the inherent limitations of LLMs in handling long input contexts, which USER-LLM addresses through its user-embedding-based contextualization.

Moreover, USER-LLM's computational efficiency is a game-changer. By distilling user activity sequences into compact user embeddings,
