New Breakthroughs in Text Embedding 🚀!

Text embeddings play a pivotal role in Natural Language Processing (NLP) tasks such as modern information retrieval, question answering, and retrieval-augmented generation (RAG). From classics like GloVe and BERT to the latest state-of-the-art (SOTA) models, these embeddings encode the semantic information of text, which makes them indispensable across these applications.

Text Embedding📽️

If you're new to the concept of embeddings, check out our quick 2-minute interview prep on this topic:

Concretely, text embeddings are vector representations of natural language that encode its semantic information. They are widely used in natural language processing (NLP) tasks such as information retrieval (IR), question answering, semantic textual similarity, bitext mining, and item recommendation.
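To make "vector representations" concrete, here is a minimal sketch of how embeddings are typically used for retrieval: documents are ranked by cosine similarity to a query vector. The 3-dimensional vectors and the document ids are made up for illustration; a real model would produce far larger vectors.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_documents(query_vec, doc_vecs):
    """Return document ids sorted from most to least similar to the query."""
    scores = {doc_id: cosine_similarity(query_vec, vec)
              for doc_id, vec in doc_vecs.items()}
    return sorted(scores, key=scores.get, reverse=True)

# Toy "embeddings" (hypothetical values, not produced by any real model).
docs = {
    "doc_cats": [0.9, 0.1, 0.0],
    "doc_dogs": [0.7, 0.3, 0.1],
    "doc_tax": [0.0, 0.2, 0.9],
}
query = [1.0, 0.0, 0.0]

ranking = rank_documents(query, docs)
print(ranking)
```

The closer two vectors point in the same direction, the higher the score, so semantically related texts end up near the top of the ranking.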

From the renowned BERT to sophisticated multi-stage trained models like E5 and BGE, the objective is to capture the rich contextual details of natural language while balancing training cost against efficiency. However, complex multi-stage training on curated data is hard to put into practice: it raises concerns about data quantity, cost, and the diversity of tasks covered.

Recently, a new option emerged from Microsoft Research: E5-mistral-7b-instruct.

Idea

The concept appears intuitive: with powerful Large Language Models (LLMs) at our disposal and a need for training data for embedding models,

why not employ LLMs to create synthetic data?

The research team did exactly that, using LLMs to generate hundreds of thousands of synthetic instances that cover a wide array of text embedding tasks across 93 languages.

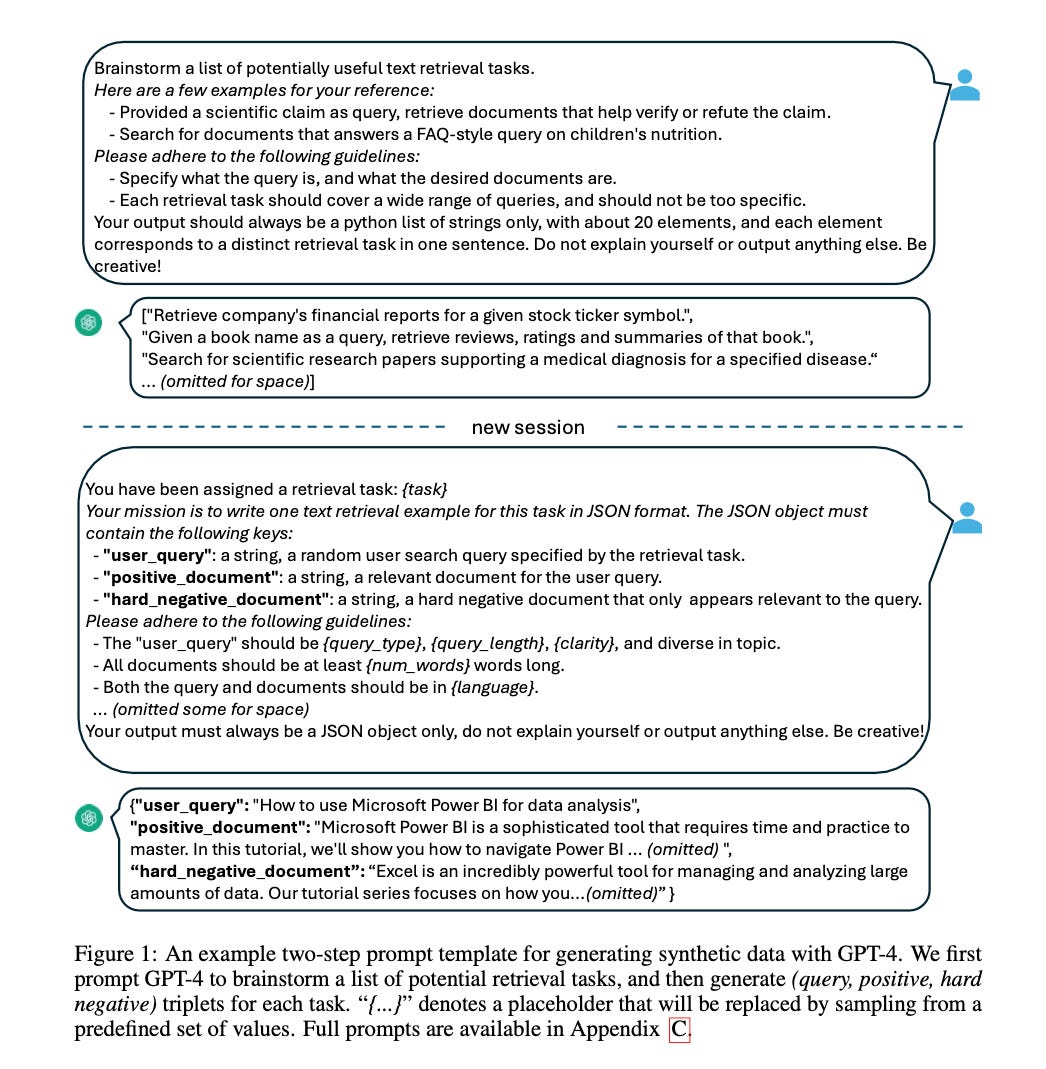

The team uses a two-step prompting strategy: the LLM is first prompted to brainstorm a pool of candidate tasks, and is then prompted to generate data tailored to a task selected from that pool.
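The two-step flow can be sketched as follows. This is only an illustration under stated assumptions: the prompt wording, the function names, and the `fake_llm` stand-in are all hypothetical, and the actual prompts used by the research team are not reproduced here.

```python
import random
from typing import Callable, Dict, List

# Hypothetical prompt wording, for illustration only.
BRAINSTORM_PROMPT = "Brainstorm {n} distinct text retrieval tasks, one per line."
GENERATE_PROMPT = (
    "Task: {task}\n"
    "Write one example with a user query and a relevant document."
)

def brainstorm_tasks(llm: Callable[[str], str], n: int) -> List[str]:
    """Step 1: ask the LLM for a pool of candidate tasks."""
    reply = llm(BRAINSTORM_PROMPT.format(n=n))
    return [line.strip() for line in reply.splitlines() if line.strip()]

def generate_example(llm: Callable[[str], str],
                     task_pool: List[str],
                     rng: random.Random) -> Dict[str, str]:
    """Step 2: pick one task from the pool and ask for data tailored to it."""
    task = rng.choice(task_pool)
    raw = llm(GENERATE_PROMPT.format(task=task))
    return {"task": task, "raw_example": raw}

def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call so the sketch runs offline."""
    if prompt.startswith("Brainstorm"):
        return ("Find a recipe given a list of ingredients\n"
                "Retrieve a legal case given a short summary\n")
    return "query: placeholder query\ndocument: placeholder document"

rng = random.Random(0)
pool = brainstorm_tasks(fake_llm, n=2)
example = generate_example(fake_llm, pool, rng)
print(example["task"])
```

Separating brainstorming from generation means the task pool can be inspected, deduplicated, or filtered before any training data is produced. In practice `fake_llm` would be replaced by a call to whichever LLM you use.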

To enhance diversity, multiple prompt templates are designed for each task type, and the data generated from the different templates is combined. Rather than a small BERT-style model, the team chose to fine-tune a powerful open-source LLM: Mistral-7B, fine-tuned exclusively on synthetic data, achieves competitive performance on the BEIR and MTEB benchmarks.

When fine-tuned on a mix of synthetic and labeled data, the model achieves state-of-the-art results, surpassing previous methods by a significant margin (+2%). Impressively, the entire training process is completed in fewer than 1k steps.

The model has 32 layers and an embedding size of 4096.

Deep Dive

Objective Function: The objective function is the negative logarithm of the matching score between a query (q+) and its corresponding document (d+), normalized by the sum of that score and the query's matching scores against the negative documents (the standard InfoNCE form). The matching score is computed using a temperature-scaled cosine similarity function.
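A minimal sketch of such an objective, written as a standard InfoNCE-style contrastive loss. The explicit list of negative documents, the default temperature of 0.05, and the function names are assumptions made for illustration, not values taken from the text above.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def matching_score(q, d, temperature):
    """Temperature-scaled cosine similarity, exponentiated: exp(cos(q, d) / t)."""
    return math.exp(cosine_similarity(q, d) / temperature)

def contrastive_loss(q, pos, negs, temperature=0.05):
    """Negative log of the positive pair's share of the total matching score.

    q: query embedding, pos: embedding of the matching document,
    negs: embeddings of non-matching documents (assumed to be given).
    The temperature default is an illustrative choice.
    """
    pos_score = matching_score(q, pos, temperature)
    neg_score = sum(matching_score(q, n, temperature) for n in negs)
    return -math.log(pos_score / (pos_score + neg_score))

query = [1.0, 0.0]
aligned_doc = [1.0, 0.0]
unrelated_doc = [0.0, 1.0]

# Low loss when the positive document is the one closest to the query...
loss_good = contrastive_loss(query, aligned_doc, [unrelated_doc])
# ...and high loss when a negative is closer than the positive.
loss_bad = contrastive_loss(query, unrelated_doc, [aligned_doc])
print(loss_good, loss_bad)
```

A smaller temperature sharpens the contrast: small differences in cosine similarity translate into large differences in matching score, which pushes the model harder to separate positives from negatives.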
