Data Science Interview Challenge

Welcome to today's data science interview challenge! Continuing from last week’s challenge:

Question 1: In the setting of LLM applications, how do you measure whether your new prompt is better than the old one?

Question 2: Why does this matter?

Here are some tips for readers' reference:

Question 1:

This picks up where last week's interview challenge left off and covers an aspect I left out then.

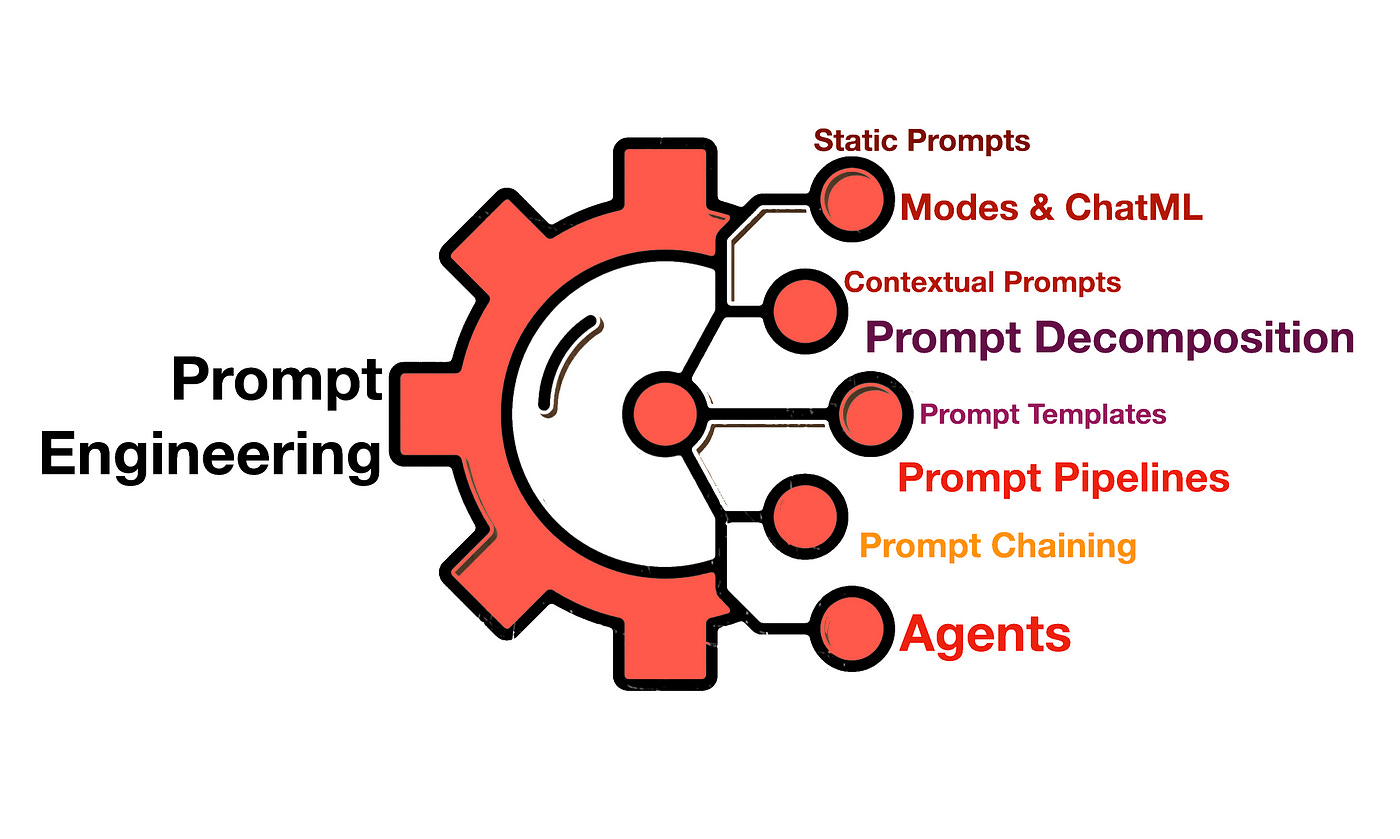

A new prompt often changes outputs differently across scenarios: better in some, worse in others. The conventional way of evaluating a model in traditional machine learning doesn't map directly onto generative models. Metrics like accuracy (or the ones we talked about last week) may not apply cleanly, because the correctness of a generated answer can be subjective and hard to quantify.

At a broader level, there are two key focal points to consider:

Curate an Evaluation Dataset Incrementally: Develop a dataset tailored to your specific tasks. You will use it to evaluate prompt performance during development, and it should grow incrementally rather than being built all at once.

Identify an Appropriate Metric or Framework for Evaluation: Select a suitable metric or framework to gauge performance. We covered this a little bit last week in Question 2:

Data Science Interview Challenge (last week's post): Question 1: Should we use proprietary or open-source when building an LLM application for production? Question 2: How to assess the performance of our LLMs / LLM applications?

This question as a whole is a complex topic and I’ll write a more detailed post about it next time!
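To make the two focal points above a bit more concrete, here is a minimal sketch in Python. Everything in it is hypothetical: `EvalCase`, `keyword_score`, `evaluate_prompt`, and the stub `fake_llm` are names invented for this example, and the keyword-overlap metric is only a stand-in for whatever metric or framework suits your task. In a real application, `generate` would call your actual model.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, List


@dataclass
class EvalCase:
    """One hand-curated example: a question plus keywords a good answer should mention."""
    question: str
    expected_keywords: List[str]


def keyword_score(answer: str, case: EvalCase) -> float:
    """Fraction of expected keywords found in the answer (a stand-in metric)."""
    if not case.expected_keywords:
        return 0.0
    text = answer.lower()
    hits = sum(1 for kw in case.expected_keywords if kw.lower() in text)
    return hits / len(case.expected_keywords)


def evaluate_prompt(
    prompt_template: str,
    dataset: List[EvalCase],
    generate: Callable[[str], str],
) -> List[float]:
    """Score one prompt template on every case in the dataset."""
    scores = []
    for case in dataset:
        answer = generate(prompt_template.format(question=case.question))
        scores.append(keyword_score(answer, case))
    return scores


def fake_llm(prompt: str) -> str:
    """Stub standing in for a real model call so the sketch runs offline."""
    if "step by step" in prompt:
        return "Paris is the capital of France, a country in Europe."
    return "Paris."


# The dataset starts small and grows as new failure cases show up.
dataset = [EvalCase("What is the capital of France?", ["Paris", "France"])]
dataset.append(EvalCase("Where is Paris?", ["France", "Europe"]))

old_prompt = "Answer briefly: {question}"
new_prompt = "Think step by step, then answer: {question}"

old_scores = evaluate_prompt(old_prompt, dataset, fake_llm)
new_scores = evaluate_prompt(new_prompt, dataset, fake_llm)
print(f"old mean: {mean(old_scores):.2f}, new mean: {mean(new_scores):.2f}")
```

Both prompts are scored on the same cases, so any difference in the means comes from the prompt change and not from a different sample of inputs.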

Question 2:

Some key points about why this matters:

LLMs make a lot of mistakes.

You can tweak a prompt and see improvements in some cases, but that does not necessarily mean an improvement in general.

Building trust with your users is important; ultimately, you need to maintain performance on their tasks.
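One way to see whether a prompt tweak helps in general or only in some cases is to compare scores case by case rather than only by their average. The sketch below assumes you already have one score per evaluation case for each prompt; `compare_per_case` is a hypothetical helper, and the scores are made-up numbers for illustration, not real results.

```python
from typing import Dict, List


def compare_per_case(old_scores: List[float], new_scores: List[float]) -> Dict[str, object]:
    """Compare two prompts case by case instead of only by their averages."""
    if len(old_scores) != len(new_scores):
        raise ValueError("both prompts must be scored on the same cases")
    deltas = [new - old for old, new in zip(old_scores, new_scores)]
    return {
        "improved": sum(1 for d in deltas if d > 0),
        "regressed": sum(1 for d in deltas if d < 0),
        "unchanged": sum(1 for d in deltas if d == 0),
        "mean_delta": sum(deltas) / len(deltas) if deltas else 0.0,
        # Indices of cases that got worse, so they can be inspected by hand.
        "regressed_cases": [i for i, d in enumerate(deltas) if d < 0],
    }


# Made-up scores for five evaluation cases (illustrative only).
old_scores = [0.5, 0.75, 1.0, 0.25, 0.5]
new_scores = [1.0, 1.0, 0.5, 0.25, 0.75]

report = compare_per_case(old_scores, new_scores)
print(report)
```

In this toy example the average goes up, yet one case gets noticeably worse. That regressed case is exactly the kind of thing that erodes user trust if it goes unnoticed.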

Let’s check out how this is explained by Josh!
