Data Science Interview Challenge

Welcome to today's data science interview challenge! Here goes:

Question 1: Why is positional encoding important for Transformer models?

Question 2: How are positional encodings generated?

Here are some tips for readers' reference:

Question 1:

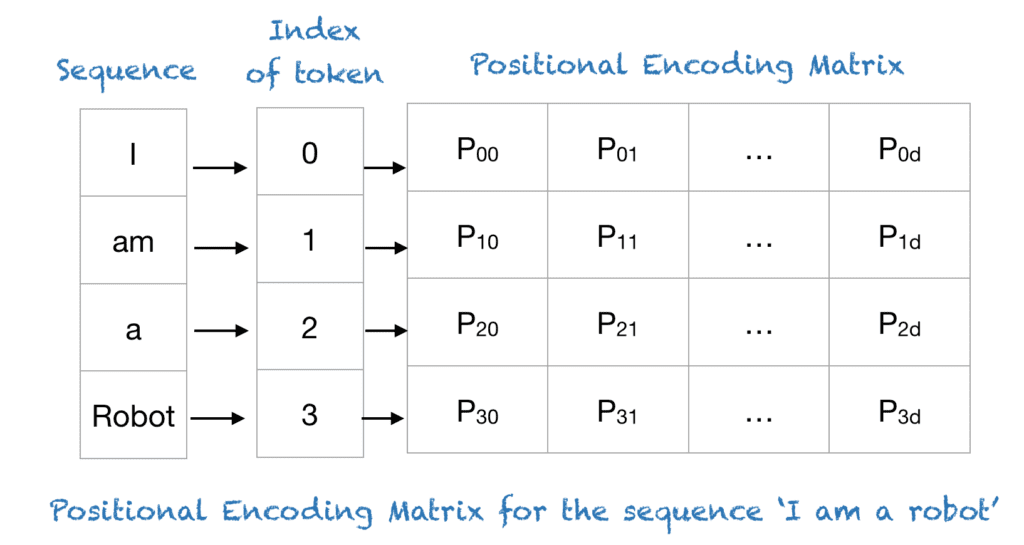

In natural language, the order of words and their positions in a sentence matter: reordering the words can change the meaning of the entire sentence ("the dog bit the man" vs. "the man bit the dog"). Recurrent neural networks have a built-in mechanism for handling order, because they process a sequence one token at a time. The Transformer, however, uses neither recurrence nor convolution. Its self-attention layers look at all tokens in parallel and, on their own, have no notion of which token comes first. Positional information must therefore be added to the model explicitly to retain the order of the words in a sentence. Positional encoding is the scheme through which this knowledge of the order of objects in a sequence is maintained.
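To make the point concrete, here is a minimal sketch in plain Python under simplifying assumptions: a single attention head with identity query/key/value projections, made-up 4-dimensional toy embeddings, and the sinusoidal encoding from the original Transformer paper ("Attention Is All You Need"), which is one common choice rather than the only option. The helper names (`sinusoidal_encoding`, `self_attention`, `add`, `close`) are hypothetical. Without positional encoding, shuffling the words merely shuffles the attention outputs, so the model cannot tell the two orders apart. Once the encoding is added, the same words in a different order produce genuinely different outputs.

```python
import math

def sinusoidal_encoding(seq_len, d_model):
    """Sinusoidal positional encoding (assumed scheme, from the original Transformer paper):
    PE[pos][2i] = sin(pos / 10000^(2i/d_model)), PE[pos][2i+1] = cos(pos / 10000^(2i/d_model))."""
    pe = []
    for pos in range(seq_len):
        row = []
        for j in range(d_model):
            i = j // 2
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

def self_attention(x):
    """Single-head scaled dot-product self-attention with identity projections (Q = K = V = x)."""
    d = len(x[0])
    out = []
    for q in x:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Output for this query: attention-weighted average of the value vectors.
        out.append([sum(w * v[c] for w, v in zip(weights, x)) for c in range(d)])
    return out

def add(a, b):
    """Element-wise sum of two equally shaped matrices (lists of rows)."""
    return [[p + q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]

def close(a, b, tol=1e-9):
    """True if two equally shaped matrices agree element-wise within tol."""
    return all(abs(p - q) < tol for ra, rb in zip(a, b) for p, q in zip(ra, rb))

# Toy word embeddings for a 3-token sentence (made-up values).
tokens = [[1.0, 0.0, 0.5, 0.2], [0.0, 1.0, 0.3, 0.9], [0.4, 0.4, 1.0, 0.0]]
perm = [2, 0, 1]  # reorder the words
shuffled = [tokens[p] for p in perm]

# Without positional encoding: shuffling the input only shuffles the output.
plain = self_attention(tokens)
plain_shuffled = self_attention(shuffled)
print(close([plain[p] for p in perm], plain_shuffled))  # True

# With positional encoding added: each token's output now depends on its position.
pe = sinusoidal_encoding(len(tokens), len(tokens[0]))
enc = self_attention(add(tokens, pe))
enc_shuffled = self_attention(add(shuffled, pe))
print(close([enc[p] for p in perm], enc_shuffled))  # False
```

The sinusoidal variant needs no trained parameters and can be computed for any sequence length, which is why it is a convenient choice for a self-contained illustration.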

Let’s see how former Rasa Research Advocate Vincent Warmerdam explains it:

Subscribe to The MLnotes Newsletter to keep reading this post and get 7 days of free access to the full post archives.