AI Orchestrator Beyond LangChain 🦜⛓️

By now, most AI practitioners are likely familiar with LangChain or have had some experience using it. But is there something new and even more promising on the horizon?

Introducing Semantic Kernel

Microsoft's Semantic Kernel is conceptually close to LangChain. It is a Software Development Kit (SDK) that integrates Large Language Models (LLMs) from providers such as OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Its appeal lies in letting you define plugins that can be chained together with just a few lines of code.

What makes Semantic Kernel special, however, is its ability to automatically orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

Kernel and Plugins

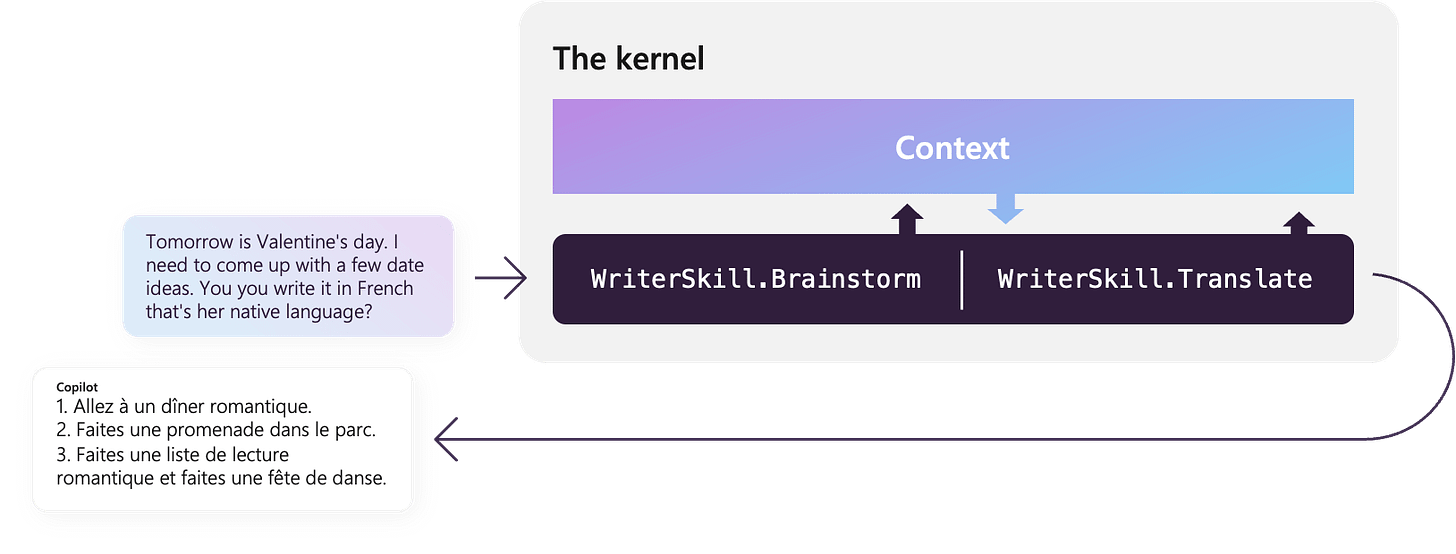

Within this framework, the term "kernel" designates a processing engine instance responsible for fulfilling a user's specific request by assembling an array of plugins.

In the above example, the kernel combines two plugins, each serving a different function. The idea is similar to a UNIX kernel, except that here we are chaining together AI prompts and native functions rather than programs.

Within the WriteSkill plugin, there can be multiple functions, each accompanied by its own semantic description.
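As a rough illustration of functions paired with semantic descriptions, here is a minimal sketch in plain Python. It does not use the Semantic Kernel library: the `Plugin` and `PluginFunction` classes are hypothetical stand-ins, and the descriptions and function bodies are invented for the example. Only the names WriteSkill, ShortPoem, and StoryGen come from this post.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class PluginFunction:
    """One callable in a plugin, plus the description a planner would read."""
    name: str
    description: str
    run: Callable[[str], str]


@dataclass
class Plugin:
    """A named group of functions (hypothetical stand-in, not the SDK class)."""
    name: str
    functions: Dict[str, PluginFunction] = field(default_factory=dict)

    def add(self, name: str, description: str, run: Callable[[str], str]) -> None:
        self.functions[name] = PluginFunction(name, description, run)


# Descriptions and bodies below are made up for illustration.
# In a real plugin each body would call an LLM instead of formatting a string.
write_skill = Plugin("WriteSkill")
write_skill.add(
    "ShortPoem",
    "Turn a scenario into a short poem.",
    lambda text: f"[prompt] Write a short poem about: {text}",
)
write_skill.add(
    "StoryGen",
    "Generate a story on a topic.",
    lambda text: f"[prompt] Write a story about: {text}",
)

# List every function with its description, as a planner would see them.
for fn in write_skill.functions.values():
    print(f"{write_skill.name}.{fn.name}: {fn.description}")
```

The point of the description field is that it is written for the model rather than for the developer: it is the only information a planner has when deciding whether a function is relevant to a request.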

Planner

The Planner orchestrates which function to call for a specific user task. Just as its name suggests, it plans for each request. In the above example, the Planner would likely employ the ShortPoem and StoryGen functions, leveraging the provided semantic descriptions to fulfill the user's query.

For those who are familiar with the earlier era of chatbot development predating ChatGPT, the term "intent" may ring a bell.

In a way, the functions are analogous to the concept of "intents," while the "description for model" serves as the semantic prompt that tells the model what each function is for.

The Planner uses AI to arrange the plugins registered within the kernel into a sequential series of tasks that achieves a specific goal.

This is a powerful concept because it allows you to create atomic functions that can be used in ways that you as a developer may not have thought of.
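One way to picture how a planner can make use of those descriptions: it lists every registered function alongside its description in a prompt, adds the user's goal, and asks the model to reply with a plan. The sketch below is plain Python and only an assumption about the general shape of such a prompt. It is not Semantic Kernel's actual prompt, and `build_planner_prompt`, the registry, and the wording are all hypothetical.

```python
from typing import Dict

# Hypothetical registry: fully qualified function name -> semantic description.
AVAILABLE_FUNCTIONS: Dict[str, str] = {
    "WriteSkill.ShortPoem": "Turn a scenario into a short poem.",
    "WriteSkill.StoryGen": "Generate a story on a topic.",
}


def build_planner_prompt(goal: str, functions: Dict[str, str]) -> str:
    """Assemble the text a planner could send to an LLM to request a plan."""
    lines = [
        "You are a planner. Use only the functions listed below.",
        "",
        "[AVAILABLE FUNCTIONS]",
    ]
    # One line per function so the model can match the goal to descriptions.
    for name, description in functions.items():
        lines.append(f"- {name}: {description}")
    lines += [
        "",
        "[GOAL]",
        goal,
        "",
        "Reply with a JSON plan listing the functions to call in order.",
    ]
    return "\n".join(lines)


prompt = build_planner_prompt("Write a short poem about autumn.", AVAILABLE_FUNCTIONS)
print(prompt)
```

Because the function list is built from whatever is registered, adding a new function changes the prompt automatically, which is why a planner can combine functions in ways the developer did not anticipate.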

An example

What’s powerful about a planner is that it automatically finds plugins registered in the kernel, incorporating them to generate plans. The following question is a math problem that is rather difficult for an LLM to solve directly as it requires multiple steps.

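```python
# `kernel` and `planner` are assumed to have been created earlier,
# with a math plugin registered in the kernel.
# Top-level `await` works in a notebook; elsewhere, wrap this in an async function.
ask = "If my investment of 2130.23 dollars increased by 23%, how much would I have after I spent $5 on a latte?"

# Ask the planner to turn the request into a plan
plan = await planner.create_plan_async(ask, kernel)

# Execute the plan
result = await planner.execute_plan_async(plan, kernel)

print("Plan results:")
print(result)
```

Working behind the scenes, a planner uses an LLM prompt to formulate a plan.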

The plan is generated according to a set of rules, and in this example it looks like the following:

```json
{
  "input": 2130.23,
  "subtasks": [
    {"function": "math_plugin.multiply", "args": {"number2": 1.23}},
    {"function": "math_plugin.subtract", "args": {"number2": 5}}
  ]
}
```

Takeaways

Microsoft’s Semantic Kernel is a powerful tool for building LLM applications. Its planner can automatically chain the functions you define, which makes it possible to build increasingly sophisticated applications as the AI landscape evolves.
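To make the chaining concrete, here is a minimal sketch of how a plan like the one from the investment example could be executed step by step. It is plain Python rather than Semantic Kernel's own executor: the `execute_plan` helper is hypothetical, and the two lambdas are assumed stand-ins for the math plugin's `multiply` and `subtract` functions.

```python
import json
from typing import Callable, Dict

# Plain-Python stand-ins for the math plugin's native functions (assumed behavior).
FUNCTIONS: Dict[str, Callable[..., float]] = {
    "math_plugin.multiply": lambda value, number2: value * number2,
    "math_plugin.subtract": lambda value, number2: value - number2,
}

# The plan from the investment example, as the planner returned it.
PLAN_JSON = """
{
  "input": 2130.23,
  "subtasks": [
    {"function": "math_plugin.multiply", "args": {"number2": 1.23}},
    {"function": "math_plugin.subtract", "args": {"number2": 5}}
  ]
}
"""


def execute_plan(plan_json: str) -> float:
    """Run each subtask in order, feeding each result into the next step."""
    plan = json.loads(plan_json)
    value = plan["input"]
    for step in plan["subtasks"]:
        function = FUNCTIONS[step["function"]]
        value = function(value, **step["args"])
    return value


result = execute_plan(PLAN_JSON)
print(round(result, 2))  # prints 2615.18
```

The arithmetic is 2130.23 × 1.23 = 2620.1829, and 2620.1829 − 5 = 2615.1829. The LLM only has to produce the plan; the exact calculation is done by ordinary code.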

Happy building!

Thanks for reading my newsletter. You can follow me on LinkedIn or Twitter @Angelina_Magr!
