There were “about 6,790,000,000” stories about AI in 2023. I guess we contributed a tiny fraction of that landscape. ❤️

97% of business owners believe that generative AI tools such as ChatGPT will have a positive impact on their business (Forbes).

There are a lot of predictions circulating from all corners. We’ve compiled some of the most interesting insights from top venture capital firms, picking the ones that resonated with us most!

Big things that awed us!

2023 was THE incredible breakout year for AI 🥳✨. Big things we’ve seen so far:

Chatbots: ChatGPT, Perplexity, Bing Chat and Google Bard

Images: DALL-E 3 and Midjourney V6

Videos: Pika.art

AI regulation: the EU’s landmark AI Act (Parliament version), guidelines issued by the Biden Administration, and the whitepaper “Frontier AI Regulation: Managing Emerging Risks to Public Safety” (FAR)

What’s next?

AI’s spring is in the application layer

I’m excited about AI in biotech, genome, climate and industrial applications.

- Masha Bucher, Founder and General Partner at Day One Ventures

I predict we’ll see narrower AI solutions: narrowly tailored, purpose-built AI.

- Olivia Moore, Partner at Andreessen Horowitz

Startups that rely on OpenAI may face platform risk, and investor interest in them could dwindle. According to Day One, the firm has stopped considering such deals altogether.

Many people don’t like the word “moat”, so let’s avoid using it.

At the end of the day, AI products exist to solve customers’ pain points, whether those customers are businesses or individuals.

Advances in AI enable more innovative alternatives to today’s status quo, and many use cases have yet to be discovered. However, the applications that truly endure will be the ones that tightly integrate AI technology with real customer needs.

This is why “AI’s spring is in the application layer”.

The deeper your expertise in a specific domain, the more likely you are to craft novel and improved solutions to age-old problems, increasing your chances of success.

Multi-modality will be the norm

In 2024, the convergence of data modalities—text, images, audio—into multimodal models will redefine AI capabilities.

- Cathy Gao, Partner at Sapphire Ventures

Voice-first apps will become integral to our lives.

- Anish Acharya, GP at Andreessen Horowitz

Innovative startups leveraging these multimodal models will not only enable better decision-making but also elevate user experiences through personalization. Expect to see groundbreaking applications across diverse sectors such as manufacturing, e-commerce, and healthcare.

We’re going to see multi-modal retrieval & multi-modal inference take center stage in AI products in 2024. AI products today are mostly textual. But users prefer more expressive software that meets them in every modality, from voice to video to audio to code and more.

- Rak Garg, Principal at Bain Capital Ventures

Scaling up these systems could give us software that exceeds expectations, delivering more accurate and human-like results. “Imagine easily drawing answers or making calls in your own voice, cutting down on long meetings.” Also, think about teamwork in which AI and humans collaborate for the best results.

Open source will catch up

We predict that more open-source models will be released in 2024, and we are especially looking at large tech companies to be one of the major contributors.

- Vivek Ramaswami, Partner at Madrona and Sabrina Wu, Investor at Madrona

The marquee model builders who have historically maintained proprietary models will begin open-sourcing select IP while releasing new benchmarks to reset the conversation around benchmarking for AGI.

- Chris Kauffman, Partner at General Catalyst

Open-source LLMs and vision models will catch up in performance (if they haven’t already); it’s only a matter of time.

In addition, recent years have seen remarkable achievements in open-source projects. Venture capitalists expect some of these open-source models to evolve into standalone companies that secure substantial funding rounds (some examples in the above chart). If you have promising ideas for open-source projects, don’t hesitate!

AI Agents will form your future teams

Previously we wrote about AutoGen, a framework in which AI agents collaborate to tackle tasks assigned by humans. No doubt this trend will continue in 2024.

What possibilities does it unlock?

Possibly more than our current imagination allows.

From our earlier post, AutoGen - Agents 🤖 That Will Take Your Job 🙀:

Hold on a moment… Don’t worry, nobody is taking your job, just yet! That post was inspired by the remarkable AutoGen framework developed by Microsoft. The concept of allowing large language models (LLMs) to engage in conversations with each other is such an ingenious idea!

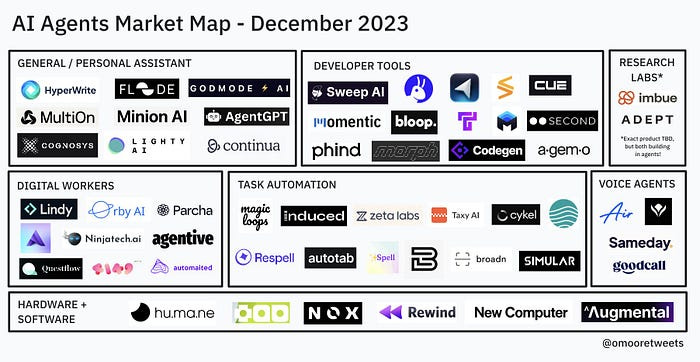

Here’s a glimpse of the current startup landscape in this field.

Expect to see more innovative AI agent solutions capable of executing complex tasks that may be multimodal, involve long sequences of reasoning steps, and demand sophisticated orchestration among models and agents.
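To make “agents talking to each other” a bit more concrete, here’s a toy sketch in Python. It is not AutoGen’s API: the `Agent` class, `run_conversation`, the two reply functions, and the TERMINATE stop word are all hypothetical choices for this example, and the “agents” are plain rule-based functions standing in for LLM calls. What it shows is the basic loop: agents take turns appending to a shared message history until one of them signals it’s done.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# A message is (sender_name, text).
Message = Tuple[str, str]


@dataclass
class Agent:
    """Hypothetical agent: a name plus a reply function.

    In a real system the reply function would call an LLM; here it is a
    plain Python function so the sketch runs without any model or API key.
    """
    name: str
    reply: Callable[[List[Message]], str]


def run_conversation(a: Agent, b: Agent, task: str, max_turns: int = 6) -> List[Message]:
    """Let two agents alternate turns on a shared history until one says TERMINATE."""
    history: List[Message] = [("user", task)]
    speakers = [a, b]
    for turn in range(max_turns):
        speaker = speakers[turn % 2]  # simple round-robin orchestration
        text = speaker.reply(history)
        history.append((speaker.name, text))
        if "TERMINATE" in text:  # stop word assumed for this sketch
            break
    return history


def writer_reply(history: List[Message]) -> str:
    """Stub 'writer': drafts first, then revises once it has received feedback."""
    critiques = [t for s, t in history if s == "critic"]
    if not critiques:
        return "Draft: AI agents can split work."
    return "Revised: AI agents can split a task into steps and review each other's work."


def critic_reply(history: List[Message]) -> str:
    """Stub 'critic': asks for one revision, then approves."""
    drafts = [t for s, t in history if s == "writer"]
    if len(drafts) < 2:
        return "Please be more specific about how they split work."
    return "Looks good. TERMINATE"


if __name__ == "__main__":
    writer = Agent("writer", writer_reply)
    critic = Agent("critic", critic_reply)
    for sender, text in run_conversation(writer, critic, "Explain AI agents in one sentence."):
        print(f"{sender}: {text}")
```

Swap each reply function for a real model call and the hard parts of orchestration show up quickly: deciding who speaks next, when to stop, and how to keep a long shared history manageable.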

Emergence of Small Language Models (SLMs) at the Forefront

Models are becoming “smaller” in size, yet more accurate and better tailored to our requirements. The spotlight is moving away from larger, more generalized language models towards those that are compact, agile, and often specialized, addressing niche or long-tail issues.

The winners in this evolving landscape will be those who invest in developing their own models, whether generalized or small foundation models, to plug the gaps that generalized models leave. This strategy not only increases accuracy and effectiveness but also reduces overhead costs. Smaller models are cheaper to run, quicker to adapt, and easier to manage.

For instance, Microsoft's 1.3 billion-parameter Phi-1 achieved state-of-the-art Python coding performance among existing small language models, specifically on the HumanEval and MBPP benchmarks. The 1.3 billion-parameter Phi-1.5, focused on common-sense reasoning and language understanding, matched the performance of models five times its size. The latest release, Phi-2, a 2.7 billion-parameter language model, shows strong reasoning and language understanding, achieving state-of-the-art performance among base language models with fewer than 13 billion parameters.
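Why are smaller models cheaper to run? One rough back-of-the-envelope view: the memory needed just to hold the weights scales with the parameter count. The sketch below assumes 16-bit weights (2 bytes per parameter) and ignores activations, caches, and runtime overhead, so treat the numbers as lower bounds rather than real deployment figures. The helper `weight_memory_gb` and the generic “13B model” entry are hypothetical, added for comparison.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float = 2.0) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes).

    Assumes 16-bit weights (2 bytes each) by default; ignores activations,
    caches, and framework overhead, so real usage is higher.
    """
    return num_params * bytes_per_param / 1e9


# Parameter counts from the text, plus a hypothetical 13B model for comparison.
models = {
    "Phi-1 / Phi-1.5": 1.3e9,
    "Phi-2": 2.7e9,
    "13B model (hypothetical)": 13e9,
}

if __name__ == "__main__":
    for name, params in models.items():
        print(f"{name}: ~{weight_memory_gb(params):.1f} GB of weights at 16-bit")
```

By this estimate, Phi-2 needs roughly 5.4 GB for its weights versus about 26 GB for a 13-billion-parameter model. That gap helps explain why smaller models can fit on cheaper hardware.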

AI regulation is coming

AI is moving faster than ever, and we don’t fully know what could go wrong. Some experts are already warning about “the risk of extinction from AI”.


Subscribe to The MLnotes Newsletter to keep reading this post and get 7 days of free access to the full post archives.